- AI-powered tenant screening tech is being criticized for potentially worsening housing discrimination.

- Landlords claim these tools combat fraud and evictions, but tenant advocates say they often misidentify tenants.

- Scrutiny is mounting, including recent HUD guidance on AI use in housing and multiple lawsuits.

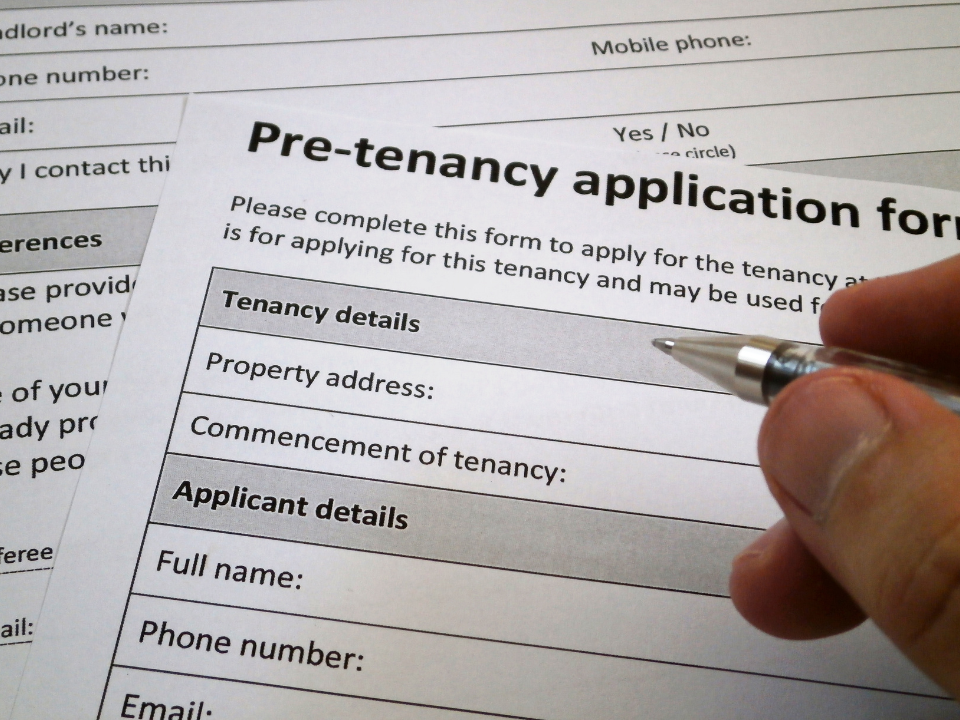

As reported by Bloomberg, AI-powered tenant screening technologies are growing more popular.

More landlords are using them to streamline applications, perform background checks, and assess potential renter risk profiles—but are these new solutions fair to renters?

Some Quick Context

AI-driven tenant screening algorithms are touted as an affordable way for landlords to reduce fraud, evictions, and paperwork, streamlining the entire tenant selection process.

But tenant advocacy groups and experts argue these tools often serve as a new barrier to housing. With opaque algorithms and limited ways for renters to dispute inaccurate findings, the screening process has raised concerns about potential violations of fair housing laws.

In some cases, prospective tenants report being denied without explanation, even when the data behind the denial was erroneous or had since been corrected.

Tech vs. Fair Housing

In 2023, Jacksonville Area Legal Aid filed a lawsuit against JWB, a North Florida landlord, alleging that the SafeRent screening tool disproportionately harmed Black renters. Specifically, the tool flagged several applicants over eviction filings that had been dismissed or dropped, and those applicants were still denied housing.

In other words, AI screening tools intended to reduce landlord risk may end up automating discrimination against tenants.

A report by TechEquity, Screened Out of Housing, highlights how tenant screening technology is contributing to housing inequality. The industry is worth $1.3B, with more than 2,000 companies relying on AI to sift through millions of tenant applications annually.

Because most landlords rely on algorithmic scores without reviewing the underlying data, prospective tenants, especially low-income renters, are often left in the dark about why they were rejected.

Lack of Transparency

The crux of the issue lies in the opacity of the screening algorithms. Renters are rarely provided with insight into how their score is calculated or which company generated it. In fact, only 3% of renters reported knowing the name of the screening company involved in their denial.

The predictive models behind these tools remain proprietary, hidden under claims of trade secrets, making it difficult for renters to challenge inaccuracies. Advocates argue this lack of transparency leads to unfair housing outcomes.

Growing Scrutiny

In May 2024, the Department of Housing and Urban Development (HUD) issued guidance calling for human review of tenant screening data to ensure compliance with fair housing laws.

The Consumer Financial Protection Bureau also fined TransUnion $23M in 2023 for inaccurate data in its tenant screening programs.

Other lawsuits, such as one in Connecticut involving CoreLogic, continue to push for more accountability when tenants are wrongfully denied housing due to screening tools.

Despite this scrutiny, the use of AI in tenant screening continues to rise. A survey by TechEquity found that two-thirds of landlords use these tools, with 37% relying solely on the algorithm’s recommendation to make rental decisions.

Tenant Impacts

For renters, these AI systems mean more than the risk of being denied housing; they also drive up costs. Many tenants pay screening fees ranging from $25 to $175 per application, depending on the property.

The impact is more severe for marginalized groups. According to Zillow data, Black and Latino renters typically submit more applications, and therefore pay more in fees, than White and Asian renters.

This combination of financial barriers and limited recourse for correcting errors reinforces systemic problems like residential segregation and housing inequality.

While the Fair Credit Reporting Act allows renters to challenge inaccuracies in their consumer profiles, it’s unclear whether these challenges impact the predictive scoring used by AI tools.

Future of Housing

As AI and algorithms continue to play a larger role in housing, tenant advocates are calling for greater transparency and regulatory oversight.

The recent HUD guidance emphasizes the need for civil rights monitoring and improved access for tenants to see and challenge their screening results.

Some states, like Massachusetts, are beginning to introduce regulations on tenant screening, a move that could signal broader reform efforts.